Agentic AI: How Intelligent Agents Will Transform Enterprise Workflows

1 Introduction

AI systems are gaining autonomy. The latest wave—Agentic AI—doesn’t just answer questions or generate content on command. These systems plan, decide, and act on their own to achieve goals you set. Instead of telling AI what to do at each step, you tell it what outcome you want and let it figure out the path.

This shift from reactive to proactive intelligence changes how organizations can deploy AI. Instead of building workflows around what the model can do in a single interaction, you can assign objectives and let the system figure out how to accomplish them. That capability is already reshaping operations in financial services, healthcare, customer support, and software development.

1.1 What Is Agentic AI?

Agentic AI systems act with intent and autonomy to achieve defined goals. The key distinction: they don’t just respond to commands; they pursue objectives. Traditional systems require you to orchestrate each step. Generative systems require you to prompt for each output. Agentic systems let you specify the outcome and trust the system to figure out how to get there.

Take loan processing as an example. Traditional systems route applications through fixed checkpoints. A generative AI chatbot might answer questions about loan requirements. An agentic system actually processes the application—pulling credit data, verifying employment, calculating risk-adjusted pricing, checking compliance rules, and routing exceptions to human reviewers when needed. The difference isn’t just scale. It’s whether the system can navigate complexity without constant human intervention.

| AI Type | What It Does | Key Limitation |
|---|---|---|
| Traditional AI | Executes predefined rules or predictions from trained models | Can’t adapt to tasks outside its training scope |
| Generative AI | Creates content (text, images, code) based on learned patterns | Reactive: requires explicit prompts for each step |
| Agentic AI | Pursues goals through multi-step planning and decision-making | Requires oversight to prevent unintended autonomous actions |

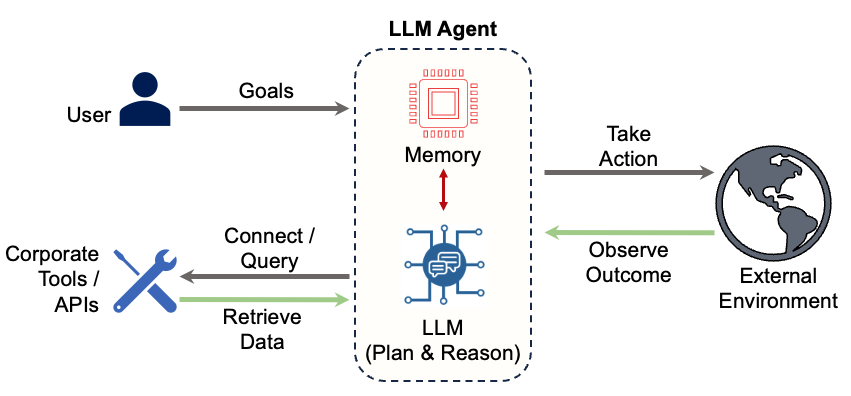

Agentic systems maintain context across interactions (memory), break complex goals into subtasks (planning), access external tools and data sources (tool use), and improve based on outcomes (learning). Earlier AI generations handled these separately—or couldn’t do them at all.

2 How Agentic AI Works

Agentic systems run in a continuous cycle: perceiving their environment, reasoning about options, planning actions, executing through tools, and learning from results. Each pass through the cycle adapts to changing conditions.

Perceive (Data Retrieval) — The agent pulls data from available sources: databases, APIs, document repositories, or user inputs. It keeps relevant context in memory from past interactions.

Reason — A reasoning engine (usually a large language model) analyzes the current state, evaluates options, and determines the best approach given the goal and constraints. It weighs tradeoffs, anticipates consequences, and selects strategies.

Plan — The planner breaks complex objectives into discrete tasks, sequences them logically, and identifies dependencies. For a loan approval goal, the agent might plan to: verify identity, pull credit history, calculate debt-to-income ratio, check against underwriting guidelines, and route to human review if red flags emerge. Each step depends on the previous one, and the agent adjusts the sequence if something fails.

Act (Tool Use & Action) — Tool-use capabilities let the agent execute planned tasks by interacting with external systems. It might call an identity verification API, query a credit bureau database, run a calculation, update a CRM record, or send a notification. The agent chooses which tools to use based on what each step requires.

Observe & Learn — The system captures feedback and adjusts its behavior for next time. If a particular sequence of checks consistently flags false positives, the system refines its approach. If certain customer segments respond better to specific communication timing, the agent adapts its strategy.

Each interaction informs the next. A customer service agent might start with a standard response template, detect frustration in the customer’s reply, shift to a different communication strategy, pull additional account context, and adjust its tone—all within a single conversation thread.

These pieces—perception, reasoning, planning, action, learning—work together, not in isolation.
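The cycle above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `LoanAgent` class, the three tool functions, their canned return values, and the 0.43 debt-to-income cutoff are all hypothetical, and the fixed step order stands in for the reasoning an LLM would normally do.

```python
from dataclasses import dataclass, field
from typing import Callable

Tool = Callable[[dict], dict]


@dataclass
class LoanAgent:
    """Toy agent loop. Every tool and threshold here is a hypothetical stand-in."""
    tools: dict[str, Tool]
    memory: list[dict] = field(default_factory=list)

    def perceive(self, application: dict) -> dict:
        # Perceive: combine the new input with context kept from earlier steps.
        return {"application": dict(application), "history": list(self.memory)}

    def plan(self, state: dict) -> list[str]:
        # Reason and plan: a real agent would ask an LLM; this uses a fixed order.
        ordered = ["verify_identity", "pull_credit", "check_dti"]
        return [name for name in ordered if name in self.tools]

    def run(self, application: dict) -> dict:
        state = self.perceive(application)
        result = state["application"]
        for step in self.plan(state):
            outcome = self.tools[step](result)             # Act: call the tool.
            result.update(outcome)                         # Observe: fold in the result.
            self.memory.append({"step": step, **outcome})  # Learn: keep a record.
            if outcome.get("red_flag"):
                result["route"] = "human_review"           # Adjust the plan on a red flag.
                return result
        result["route"] = "continue_processing"
        return result


def verify_identity(app: dict) -> dict:
    return {"identity_ok": True}  # canned response, no real API call


def pull_credit(app: dict) -> dict:
    return {"credit_score": 640}  # canned response


def check_dti(app: dict) -> dict:
    dti = app["monthly_debt"] / app["monthly_income"]
    return {"dti": dti, "red_flag": dti > 0.43}  # 0.43 is an illustrative cutoff


agent = LoanAgent(tools={
    "verify_identity": verify_identity,
    "pull_credit": pull_credit,
    "check_dti": check_dti,
})
result = agent.run({"monthly_debt": 2500, "monthly_income": 5000})
print(result["route"])  # human_review, because the ratio is 0.5
```

The `memory` list is only a record here; a real system would feed it back into the reasoning step so that later runs behave differently.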

3 Agentic Design Patterns

Agentic systems rely on core design patterns. Each solves a different problem, and real systems combine them. Understanding these five patterns helps you choose the right architecture for your use case.

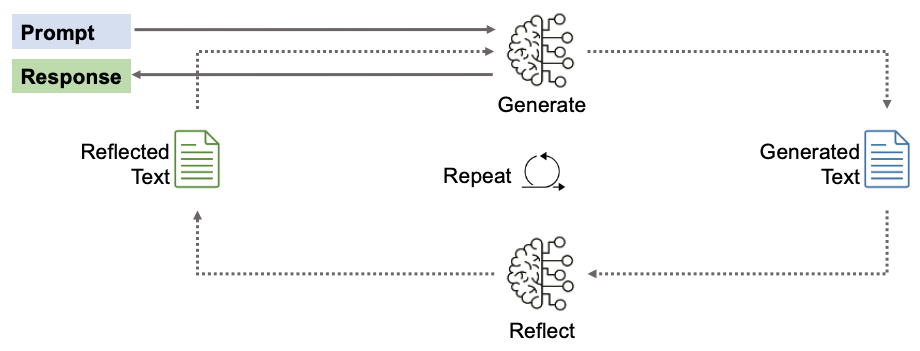

3.1 Reflection

An agent that can’t critique its own output is just automation with extra steps. Reflection lets agents evaluate and improve their outputs before delivering them—the same quality check that humans do.

Research shows that even state-of-the-art models like GPT-4 improve their outputs by roughly 20% on average through iterative self-feedback.[1] The key is structured evaluation criteria: specific feedback on what needs improvement drives better refinements than generic critique.

Best for: Tasks where you need to check and improve quality before delivering. It works well for regulatory reports, customer communications, and compliance documents that have clear quality standards.

A customer service agent might review its drafted response for tone, clarity, and alignment with brand guidelines before sending. A credit analysis agent might validate whether its risk assessment considered all required factors and whether calculations are correct. Each iteration catches errors that would otherwise reach customers or regulators.

Without reflection, agents repeat mistakes. With it, they improve with each iteration and maintain the quality standards banking requires.
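A reflection loop can be sketched as draft, critique, revise, repeat. The example below is a simplified illustration: `critique` and `revise` are hypothetical stand-ins for LLM calls, and the `REQUIRED_TERMS` checklist is an invented example of structured evaluation criteria.

```python
from typing import Callable

# Hypothetical checklist a customer loan letter must cover.
REQUIRED_TERMS = ("interest rate", "repayment term", "contact")


def critique(draft: str) -> list[str]:
    # Structured feedback: name each specific gap instead of a generic score.
    return [f"missing: {term}" for term in REQUIRED_TERMS if term not in draft.lower()]


def revise(draft: str, issues: list[str]) -> str:
    # Stand-in for an LLM rewrite: add a placeholder for each reported gap.
    additions = [f"[add details on {issue.removeprefix('missing: ')}]" for issue in issues]
    return draft + " " + " ".join(additions)


def reflect_and_refine(
    draft: str,
    critique_fn: Callable[[str], list[str]],
    revise_fn: Callable[[str, list[str]], str],
    max_rounds: int = 3,
) -> str:
    """Critique and revise until no issues remain or the round budget is spent."""
    for _ in range(max_rounds):
        issues = critique_fn(draft)
        if not issues:
            break
        draft = revise_fn(draft, issues)
    return draft


final = reflect_and_refine("Your loan is approved.", critique, revise)
print(final)
```

The `max_rounds` cap matters in practice: without it, a critic that is never satisfied would loop indefinitely and run up model costs.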

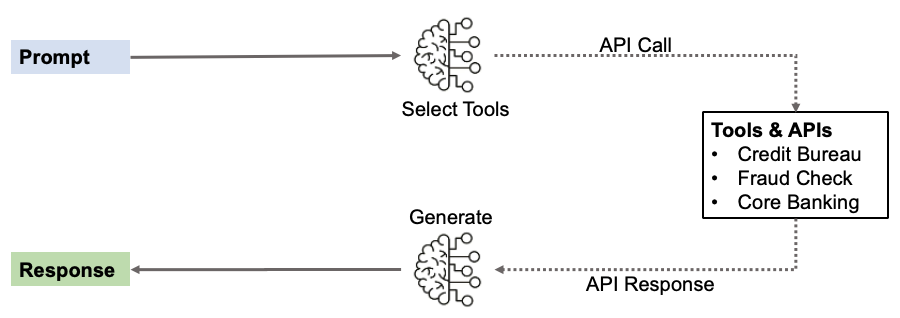

3.2 Tool Use

Agents without access to external systems are limited to what they learned during training. Tool Use gives agents direct access to your APIs, databases, and systems to ground their actions in current, accurate information.

Research demonstrates that LLMs cannot reliably self-verify factual information. External tools provide the ground truth needed for accurate verification, improving performance by 7-8 percentage points over self-critique alone.[2]

Best for: Tasks requiring access to external systems, current information, or specialized capabilities. Essential when agents need to bridge the gap between reasoning and real-world action.

The agent decides when to use which tool based on task requirements. A loan processing agent might call a credit bureau for credit history, query a fraud detection system for risk indicators, check compliance databases for regulatory requirements, and update the core banking system with the decision—all without human coordination at each step.

Tool use breaks agents free from their training data limitations. Now they can interact with the real systems that run your business.
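One common way to wire this up is a tool registry: the model emits a structured request naming a tool and its arguments, and a dispatcher runs it. The sketch below assumes a request shape of `{"tool": ..., "args": {...}}`; the tool names and canned return values are hypothetical and stand in for real credit bureau and fraud system calls.

```python
from typing import Callable

TOOLS: dict[str, Callable[..., dict]] = {}


def tool(name: str):
    """Decorator that registers a function under a tool name."""
    def register(fn: Callable[..., dict]) -> Callable[..., dict]:
        TOOLS[name] = fn
        return fn
    return register


@tool("credit_history")
def credit_history(customer_id: str) -> dict:
    return {"customer_id": customer_id, "score": 712}  # canned data, no real bureau call


@tool("fraud_check")
def fraud_check(customer_id: str) -> dict:
    return {"customer_id": customer_id, "risk": "low"}  # canned data


def call_tool(request: dict) -> dict:
    """Run a model-produced request and return errors as data the agent can read."""
    name = request.get("tool")
    if name not in TOOLS:
        return {"error": f"unknown tool: {name}"}
    try:
        return TOOLS[name](**request.get("args", {}))
    except TypeError as exc:
        return {"error": f"bad arguments for {name}: {exc}"}


print(call_tool({"tool": "credit_history", "args": {"customer_id": "C-1"}}))
print(call_tool({"tool": "wire_transfer", "args": {}}))
```

Returning errors as ordinary results, rather than raising, lets the agent see that a call failed and choose a different tool or ask for help.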

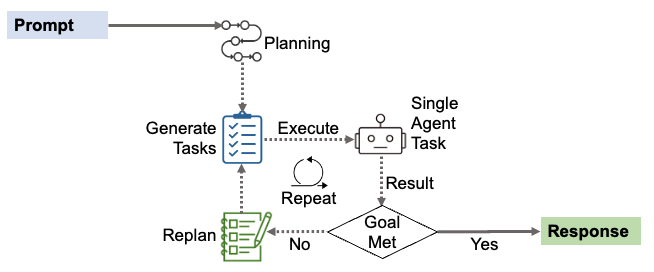

3.3 Planning

Complex tasks need a plan. Without planning, agents jump between tasks or skip steps. Planning breaks complex problems into sequences, so agents map the approach before executing.

Best for: Multi-step problems where order matters and where breaking the problem into phases improves success rates. Essential when tasks have dependencies or when parallel execution can improve efficiency.

A commercial loan application requires multiple verification steps: document extraction, financial analysis, credit scoring, industry benchmarking, and collateral valuation. Effective planning manages dependencies—financial analysis can’t begin until documents are extracted, but once complete, credit scoring, benchmarking, and collateral valuation can run in parallel. The agent plans this execution strategy upfront rather than discovering dependencies through trial and error.

Planning prevents wasted work from executing tasks in the wrong order or repeating steps. In banking, where each verification may involve expensive API calls or human review, planning the optimal execution path saves both time and cost.
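The dependency handling described above maps naturally onto a topological sort. The sketch below uses Python's standard-library `graphlib` to group the commercial loan steps into waves that can run in parallel. The dependency map is an assumption: it reads the text as saying the three final checks all wait on financial analysis.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it starts.
# This reading of the dependencies is an assumption for illustration.
DEPENDENCIES = {
    "document_extraction": set(),
    "financial_analysis": {"document_extraction"},
    "credit_scoring": {"financial_analysis"},
    "industry_benchmarking": {"financial_analysis"},
    "collateral_valuation": {"financial_analysis"},
}


def plan_waves(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; tasks within one wave have no mutual dependencies."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the plan is circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)
    return waves


for number, wave in enumerate(plan_waves(DEPENDENCIES), start=1):
    print(number, wave)
# 1 ['document_extraction']
# 2 ['financial_analysis']
# 3 ['collateral_valuation', 'credit_scoring', 'industry_benchmarking']
```

Each wave could be handed to a thread pool or async task group; the sorter guarantees nothing in a wave depends on anything else in it.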

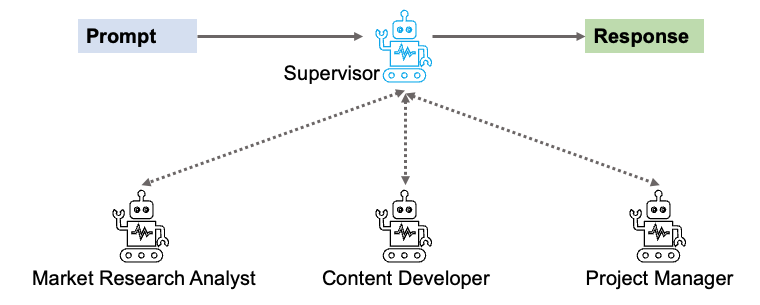

3.4 Multi-Agent Collaboration

Some problems need multiple specialists. No single agent has all the expertise required. The Multi-agent pattern coordinates specialized agents to tackle what individual agents can’t handle alone.

Research demonstrates that multi-agent debate significantly improves performance over single agents, with accuracy gains of 7-16 percentage points across reasoning and factuality tasks when agents critique and refine each other’s outputs.[3]

Best for: Complex problems requiring specialized expertise where task decomposition provides clear benefits.

Multi-agent systems are expensive, high-latency, and difficult to debug. Reserve for tasks where the benefits of specialization clearly outweigh the operational complexity.

Take fraud investigation as an example. One agent analyzes transactions, another checks customer history, a third searches fraud databases. A supervisor coordinates their findings. Each brings different expertise, and together they spot patterns no single agent would miss.

Multi-agent systems work like loan committees: different specialists bring different expertise. The cost shows up in maintenance and debugging. When something breaks, you might trace through 10 to 50 or more agent calls to find the problem. Don’t go multi-agent unless simpler patterns can’t handle it.
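The supervisor arrangement from the fraud example can be sketched as follows. This is a toy version under stated assumptions: the three specialist functions use invented rules (a 5,000 amount threshold, a 30-day account age, a hard-coded watchlist) in place of LLM-backed agents, and the supervisor's "escalate at two votes" rule is hypothetical.

```python
from typing import Callable

Specialist = Callable[[dict], dict]


def transaction_agent(case: dict) -> dict:
    large = [t for t in case["transactions"] if t["amount"] > 5000]  # illustrative threshold
    return {"agent": "transactions", "suspicious": bool(large),
            "detail": f"{len(large)} large transfers"}


def history_agent(case: dict) -> dict:
    is_new = case["account_age_days"] < 30  # illustrative threshold
    return {"agent": "history", "suspicious": is_new,
            "detail": f"account is {case['account_age_days']} days old"}


def watchlist_agent(case: dict) -> dict:
    hit = case["counterparty"] in case["known_fraud_accounts"]
    return {"agent": "watchlist", "suspicious": hit,
            "detail": "counterparty on watchlist" if hit else "no watchlist match"}


def supervisor(case: dict, specialists: list[Specialist], escalate_at: int = 2) -> dict:
    """Collect each specialist's finding and escalate when enough of them agree."""
    findings = [agent(case) for agent in specialists]
    votes = sum(finding["suspicious"] for finding in findings)
    return {"escalate": votes >= escalate_at, "votes": votes, "findings": findings}


case = {
    "transactions": [{"amount": 9800}, {"amount": 120}, {"amount": 7500}],
    "account_age_days": 12,
    "counterparty": "ACC-777",
    "known_fraud_accounts": {"ACC-001", "ACC-002"},
}
report = supervisor(case, [transaction_agent, history_agent, watchlist_agent])
print(report["escalate"], report["votes"])  # True 2
```

Keeping every finding in the report, not just the final verdict, is what makes a multi-agent failure traceable later.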

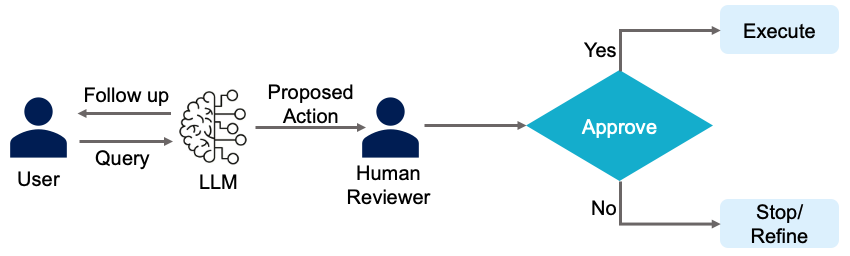

3.5 Human in the Loop

For high-stakes decisions, full automation isn’t always appropriate—or legal. Human-in-the-loop lets agents handle analysis and recommendations while humans make final decisions at critical checkpoints. This meets regulatory requirements and still delivers real efficiency gains.

Best for: High-stakes decisions where errors have serious consequences or regulatory requirements mandate human oversight. Essential when accountability must rest with humans, not algorithms.

Loan approvals, credit limit increases, account closures, fraud confirmations, compliance violations—any decision that significantly affects a customer’s financial life requires human oversight. It’s not optional in banking. In many cases, it’s legally required.

Take loan underwriting. An agent analyzes credit history, calculates risk scores, checks compliance requirements, and recommends approval terms with detailed reasoning. A human underwriter reviews the analysis and makes the final decision. The agent handles the analysis. The human applies judgment and takes responsibility.

Or fraud investigation. An agent flags suspicious transactions, gathers evidence from multiple systems, and analyzes patterns against known fraud schemes. A human fraud analyst reviews the evidence, considers customer context, and decides whether to block transactions. The agent accelerates investigation. The human prevents false positives that damage customer relationships.

Human-in-the-loop maintains regulatory compliance, preserves accountability, and prevents reputational damage from bad automated decisions.
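A human-in-the-loop checkpoint can be modeled as a gate the agent cannot pass on its own. In the sketch below, the agent only produces a `Recommendation`; the final `Decision` always records who made it. The class names, field names, and the `reviewer` callable (which stands in for a real review queue or UI) are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Callable

ALLOWED_ACTIONS = {"approve", "decline"}


@dataclass(frozen=True)
class Recommendation:
    application_id: str
    action: str      # what the agent suggests
    reasoning: str   # shown to the reviewer alongside the suggestion


@dataclass(frozen=True)
class Decision:
    application_id: str
    action: str                # what the human decided
    decided_by: str            # accountability rests with a named person
    agent_recommendation: str  # kept so overrides can be reviewed later


# The reviewer callable returns (reviewer_id, action).
Reviewer = Callable[[Recommendation], tuple[str, str]]


def decide_with_human(rec: Recommendation, reviewer: Reviewer) -> Decision:
    """Route the agent's recommendation to a human and record the human's call."""
    reviewer_id, action = reviewer(rec)
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    return Decision(rec.application_id, action, reviewer_id, rec.action)


def cautious_underwriter(rec: Recommendation) -> tuple[str, str]:
    # Hypothetical reviewer who overrides the agent for this application.
    return ("underwriter-17", "decline")


rec = Recommendation("APP-1", "approve", "Debt-to-income ratio within guideline")
decision = decide_with_human(rec, cautious_underwriter)
print(decision)
```

Storing the agent's recommendation next to the human's decision makes disagreement rates measurable, which helps show whether the agent's analysis is actually useful.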

4 Pattern Selection with Banking Examples

Not every problem needs full autonomy. Here’s how to decide:

4.1 Quick Selection Guide

| Use Case | Use This Pattern | Example |
|---|---|---|
| Predictable multi-step workflow | Planning + Tool Use | Account opening: KYC → credit check → document generation |
| High-stakes decision requiring approval | Planning + Human in Loop | Loan approval: agent analyzes risk, human approves |
| Output needs quality control | Reflection + Tool Use | Regulatory reports: draft → self-review → submit |
| Requires specialized expertise | Multi-Agent (use sparingly) | Fraud investigation: separate agents for transactions, history, and threat analysis |
| Tasks evolve step by step | ReAct + Tool Use | Suspicious activity monitoring: assess → investigate → escalate if needed |

4.2 Banking Essentials

- Human-in-the-loop is mandatory for loan approvals, fraud detection, and any other decision affecting customer finances.

- Reflection is essential for regulatory reports and compliance documents—quality checks aren’t optional.

- Audit trails are required for every agent decision. Regulators will ask.
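As one possible shape for an audit trail, the sketch below keeps an append-only log in which each entry includes a hash of the previous one, so later edits are detectable. The hash chaining is an added illustration, not something the requirements above prescribe, and the `AuditTrail` class and its field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    # Stable serialization so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditTrail:
    """In-memory, append-only log; a real system would persist entries durably."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, agent: str, action: str, detail: dict) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "action": action,
            "detail": detail,  # must be JSON-serializable
            "prev_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry["hash"] = _digest(entry)  # computed before the hash field exists
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if any entry was altered or the chain was broken."""
        prev_hash = ""
        for entry in self.entries:
            body = {key: value for key, value in entry.items() if key != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("credit_agent", "pull_credit", {"application_id": "APP-1", "score": 640})
trail.record("credit_agent", "recommend", {"application_id": "APP-1", "action": "decline"})
print(trail.verify())  # True
```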

Start simple. Use the simplest pattern that solves your problem. Add complexity only with clear evidence it’s needed. Multi-agent systems can require tracing 10–50+ LLM calls to debug a single failure—don’t go there unless you must.

5 Where Agentic AI Works Today

Leading organizations are moving beyond pilots to deploy agentic systems that deliver real productivity gains.

Financial Services

BNY Mellon launched Eliza, an internal AI platform now used by 96% of the bank’s 50,000 employees. The platform hosts over 100 AI agents running tasks from payment processing to code repair.[8, 9]

McKinsey’s QuantumBlack Labs automated credit memo drafting for a bank using agentic workflows. An LLM manager created work plans and assigned tasks to specialized sub-agents for data analysis and verification. Credit analyst productivity jumped as much as 60%.[11]

Software Development

JM Family built a multi-agent system for software development: specialized agents handle requirements, coding, documentation, and QA, all managed by a coordinating agent.[6]

GitLab integrates AI agents across the entire software lifecycle to automate repetitive coding tasks. When Ally Bank implemented this system, they increased their ability to deploy software updates by 55%, significantly accelerating their development cycle.[12]

Business Operations

Salesforce’s Agentforce platform lets companies deploy autonomous agents for multi-step tasks: resolving support tickets, scheduling meetings, qualifying sales leads.[13]

Fujitsu cut sales proposal production time by 67% using AI agents through Microsoft’s Azure AI Agent Services.[5, 6]

Cybersecurity

Darktrace uses autonomous agents to detect and stop cyberthreats in real time. The agents monitor network traffic continuously, spot anomalies, and decide how to respond without human intervention.[14]

6 References

[1] Madaan, A. et al. (2023). SELF-REFINE: Iterative Refinement with Self-Feedback. https://arxiv.org/pdf/2303.17651

[2] Gou, Z. et al. (2024). CRITIC: Large Language Models Can Self-Correct with Tool-Interactive Critiquing. https://arxiv.org/pdf/2305.11738

[3] Du, Y. et al. (2023). Improving Factuality and Reasoning in Language Models through Multiagent Debate. https://arxiv.org/pdf/2305.14325

[4] Shen, Y. et al. (2023). HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face. https://arxiv.org/pdf/2303.17580

[5] Microsoft. (2025). Fujitsu is revolutionizing sales efficiency with Azure AI Agent Service. https://www.microsoft.com/en/customers/story/21885-fujitsu-azure-ai-foundry

[6] Microsoft. (2025). Agent Factory: The new era of agentic AI—common use cases and design patterns. https://azure.microsoft.com/en-us/blog/agent-factory-the-new-era-of-agentic-ai-common-use-cases-and-design-patterns/

[7] Morgan Stanley. (2023-2024). AI @ Morgan Stanley Platform. https://www.morganstanley.com/press-releases/morgan-stanley-research-announces-askresearchgpt

[8] BNY Mellon. (2025). Artificial Intelligence at BNY. https://www.bny.com/corporate/global/en/about-us/technology-innovation/artificial-intelligence.html

[9] PYMNTS. (2025). BNY Says 96% of Employees Use In-House AI Platform. https://www.pymnts.com/news/artificial-intelligence/2025/bny-says-96percent-employees-use-in-house-ai-platform/

[10] McKinsey Technology Trends Outlook 2025. https://www.mckinsey.com/capabilities/tech-and-ai/our-insights/the-top-trends-in-tech

[11] Delivering tangible business impact from generative AI. https://medium.com/quantumblack/delivering-tangible-business-impact-from-generative-ai-ccc878ab24bc

[12] Ally Financial cuts pipeline outages and eases security scanning with GitLab. https://about.gitlab.com/customers/ally/

[13] Salesforce. (2025). Salesforce launches Agentforce 2dx with new capabilities to embed proactive agentic AI into any workflow, create multimodal experiences, and extend digital labor throughout the enterprise. Press release, March 5, 2025.

[14] Darktrace’s AI Transforms Global Cybersecurity Landscape. https://cybermagazine.com/technology-and-ai/how-darktraces-self-learning-ai-is-redefining-cybersecurity