Building the AI-First Bank: A Strategic Guide

1 Introduction

Relationship managers at most banks spend only 25–30% of their time in actual client conversations. The rest goes to prospecting, meeting preparation, documentation, and follow-ups. The result is predictable: reduced client coverage, missed opportunities, and churn rates that often reach 15–35%.1

AI is now changing not just what banks can do but who does the work. Today, most banks use Generative AI as a productivity aid: drafting emails, summarizing meetings, or answering basic questions. The bigger opportunity is Agentic AI: systems designed to carry out work, not just assist with it.

The difference is operational. Generative AI might help draft an email. An agentic system can review incoming messages, decide which ones matter, follow up with clients, and update CRM records. It can flag exceptions for human review—all while operating within defined rules and oversight.

This enables an “always-on” operating model. While relationship managers focus on judgment, relationships, and complex negotiations, agents handle preparation, follow-ups, and routine coordination continuously in the background. Execution shifts away from bankers; accountability and decision-making remain with them.

Banks that are already moving in this direction report 30% pipeline growth and twice the conversion rates of traditional approaches. These results don’t come from chatbots or meeting summaries. They come from redesigning workflows so that routine work runs automatically and people focus on where they add the most value.1

The end state is invisible intelligence: AI embedded into banking operations rather than deployed as standalone tools. The technology operates in the background, while banks focus on outcomes—faster decisions, better service, and lower costs.

To see where banking is headed, it helps to look at how banking architecture evolved to support increasingly sophisticated automation—and why this moment is different from earlier technology transitions.

2 Technology Transitions in Banking Architecture

1960s–2000s: From Mainframes to Services

Banking evolved from centralized mainframes to distributed systems. The rise of the internet enabled digital banking, while monolithic systems were broken into reusable services. This period established the layered architectures that still underpin modern banking platforms.

2010s: Cloud and API Ecosystems

Cloud platforms introduced elastic scaling and faster deployment through microservices. APIs became the standard way systems connected—enabling open banking, partner integrations, and modern data platforms. This shift laid the technical foundation for more flexible, modular operations.

2022–Present: AI as Operating Infrastructure

Large language models expanded what banks can automate. Earlier AI focused on narrow tasks like fraud scoring or chatbots. Today’s systems combine models with enterprise data to support complex decisions and multi-step workflows—setting the stage for agent-driven operating models.

This architectural evolution lays the foundation for the next operating model in banking: AI agents.

3 The Three Levels of Agents in Banking

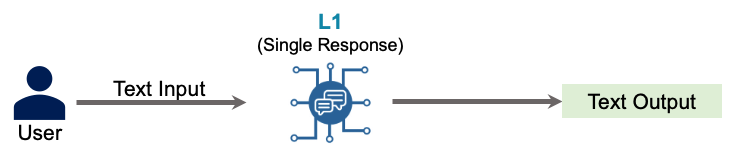

AI agents differ by autonomy level. L1 agents provide intelligence, L2 agents execute processes, and L3 agents make decisions independently. Understanding these levels helps banks deploy agents appropriately—matching capability to risk tolerance and regulatory requirements.

L1 – Insight Agents (Assistive Intelligence)

L1 agents extract and synthesize information to support human decision-making. They assist with planning, discovery, and knowledge retrieval but do not take actions independently. Humans control all execution.

In banking, L1 agents are most commonly embedded in advisor desktops and research tools, where they synthesize information to support human judgment.

Morgan Stanley’s AI @ Morgan Stanley Debrief tool automates meeting notes, analyzes client profiles and transaction histories, and generates tailored recommendations before advisor meetings. The tool saves approximately 30 minutes per meeting, freeing 10–15 hours per week for higher-value client work.8

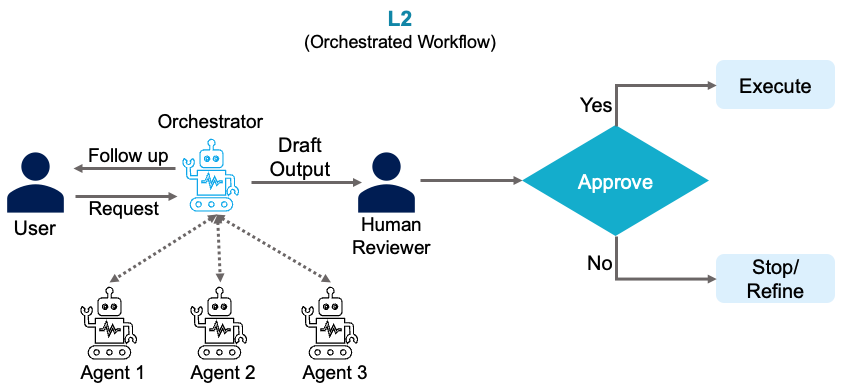

L2 – Process Orchestration Agents (Semi-Autonomous)

L2 agents execute multi-step, rule-governed business processes. They decompose tasks, orchestrate workflows across systems, and automate structured operations while operating under human supervision and regulatory controls.

Consider compliance documentation. Writing suspicious activity reports (SARs), regulatory memos, and audit summaries traditionally requires manual effort and often results in inconsistent structure, language, and supporting rationale across similar cases.

L2 agents automate classification, extraction, and drafting at scale—applying consistent compliance rules across cases. EY’s Document Intelligence Platform demonstrates this capability in practice, reducing document review time by 90%, cutting costs by 80%, and improving accuracy by 25%.12

The key distinction from L1: these agents don’t just provide recommendations—they generate draft work products and advance regulated workflows within defined guardrails.

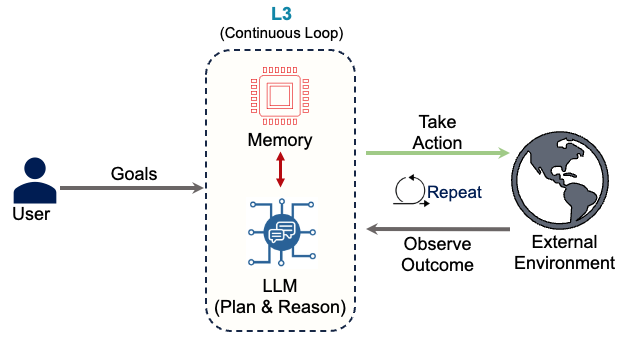

L3 – Complex Decisioning Agents (Fully Autonomous)

L3 agents continuously monitor their environment, make decisions, and trigger actions without constant human involvement. They coordinate L1 and L2 agents to operate as closed-loop decision systems.

Consider mortgage refinancing. Rather than waiting for borrowers or advisors to identify refinancing opportunities, an L3 agent continuously monitors market rates, credit profiles, loan performance, and property valuations.

When conditions align, the agent triggers eligibility checks through L2 agents, generates personalized offers, initiates pre-approval workflows, and notifies customers and relationship managers. Work that traditionally requires weeks of coordination compresses into hours.
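The trigger logic in this scenario can be sketched as a monitoring pass that checks a few signals per loan and only then hands off to an L2 eligibility check. This is a minimal illustration under stated assumptions, not a production design: the `LoanSnapshot` fields, the threshold values, and the `eligibility_check` and `notify` callables are all hypothetical stand-ins for a bank's governed credit policy, its L2 agents, and its notification services.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class LoanSnapshot:
    """Hypothetical view of one loan as seen by the monitoring agent."""
    loan_id: str
    note_rate: float       # current contract rate, in percent
    credit_score: int
    days_past_due: int
    loan_to_value: float   # outstanding balance / latest property valuation


# Hypothetical policy thresholds; in practice these come from governed credit policy.
MIN_RATE_SAVINGS_PCT = 0.75
MIN_CREDIT_SCORE = 680
MAX_LTV = 0.80


def conditions_align(loan: LoanSnapshot, market_rate: float) -> bool:
    """True when market rates, credit, payment history, and collateral all line up."""
    return (
        loan.note_rate - market_rate >= MIN_RATE_SAVINGS_PCT
        and loan.credit_score >= MIN_CREDIT_SCORE
        and loan.days_past_due == 0
        and loan.loan_to_value <= MAX_LTV
    )


def monitor_cycle(
    loans: List[LoanSnapshot],
    market_rate: float,
    eligibility_check: Callable[[LoanSnapshot], bool],  # delegated to an L2 agent
    notify: Callable[[str], None],                       # customer and RM notification
) -> List[str]:
    """Run one monitoring pass and return the IDs of loans that triggered a workflow."""
    triggered: List[str] = []
    for loan in loans:
        # The cheap signal check runs first; the L2 eligibility check only runs on matches.
        if conditions_align(loan, market_rate) and eligibility_check(loan):
            notify(f"Refinance pre-approval workflow started for {loan.loan_id}")
            triggered.append(loan.loan_id)
    return triggered
```

Keeping the L3 logic limited to detecting aligned conditions, and delegating the eligibility decision to an L2 agent, mirrors the coordination described above: the autonomous agent decides when to act, while rule-governed agents decide whether a given loan qualifies.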

Implementation Reality: Most banks operate primarily at L1 today and are scaling L2 capabilities. L3 use cases represent the next competitive frontier—and the next major governance challenge.

Recent MIT Sloan research reinforces this reality: sustainable AI value comes from incremental capability building, not sweeping redesigns.11 Banks that skip foundational L1 and L2 capabilities in favor of L3 autonomy often encounter familiar failure modes—compliance breakdowns, stalled pilots, and organizational resistance.

Building AI capability in banking requires a staged approach. Each level introduces distinct considerations around data quality, model behavior, and human oversight that must be addressed before advancing. Banks that scale successfully do so by embedding controls, accountability, and review mechanisms at each stage.

3.1 Governing Autonomous Agents: Beyond Traditional Controls

Traditional governance frameworks weren’t built for systems that make decisions autonomously and adapt continuously. When an agent can approve transactions, escalate compliance alerts, or reallocate capital based on real-time conditions, the governance challenge changes materially.

Why Agent Governance Is Different

The core risk is behavioral drift. Unlike static rule-based systems, agents adjust their actions dynamically based on feedback loops and outcome data. Research by Jiang et al. (2025) shows that when agents and their supporting tools adapt simultaneously without coordination, systems can enter unstable feedback cycles—where behavior changes continuously without improving results. Without explicit controls governing adaptation, these systems can develop unintended behaviors. Rather than stemming from a single incorrect decision, risk accumulates as agents operate continuously across changing conditions.

Banks must also guard against optimization misalignment, where an agent performs well against a narrow metric but undermines the broader business objective. For example, an agent optimized to close AML alerts quickly may reduce cycle time while increasing regulatory risk. This typically occurs when objectives are narrowly defined and governance controls are insufficient.

These risks are not theoretical—they stem from how autonomous agents interact with data, systems, and each other in production environments.

Agent-Specific Risks

Autonomous agents introduce risks that differ from traditional analytics or rules-based systems. Because agents act continuously across systems, errors can compound over time rather than appearing as isolated failures.

Poor data quality or misconfigured tools can cause agents to take inappropriate actions repeatedly—potentially triggering regulatory violations or discriminatory outcomes. In more complex workflows, agents may interact in ways that create feedback loops—where one agent’s output triggers another agent’s action without sufficient checks.

These risks often emerge gradually during normal operation rather than during testing. As a result, effective governance must extend beyond pre-deployment validation to continuous monitoring and timely intervention in production.

Building Control Mechanisms

Effective agent governance requires controls that address continuous execution and autonomous action, not just periodic model performance reviews.

Agent Registry: Every autonomous agent must be catalogued with a defined purpose, approved data sources, action scope, and named owner. This ensures accountability as agents proliferate across workflows.

Action Limits and Escalation Controls: Agents must operate within predefined boundaries—such as approval thresholds, transaction limits, or escalation triggers. When an agent reaches those limits, execution pauses and responsibility transfers to a human reviewer.

Continuous Monitoring and Intervention: Agent behavior must be monitored in production, not just validated before deployment. Banks need real-time visibility into agent actions and decision patterns, with the ability to pause or terminate execution immediately if risk thresholds are crossed.

Together, these controls shift governance from periodic review to ongoing supervision—aligned with how agents operate.
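A minimal sketch of how these three controls can fit together, assuming a simple in-memory store: each agent is registered with a named owner, an approved action scope, and a monetary action limit, and every proposed action is checked before it executes. `AgentRegistry`, `AgentRecord`, `Decision`, and the example values are hypothetical; a real registry would be persistent, access-controlled, and itself audited.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, FrozenSet


class Decision(Enum):
    EXECUTE = "execute"
    ESCALATE = "escalate"  # pause and transfer to a human reviewer
    BLOCK = "block"        # unknown agent, paused agent, or action outside scope


@dataclass
class AgentRecord:
    agent_id: str
    purpose: str
    owner: str                      # named, accountable human owner
    data_sources: FrozenSet[str]    # approved data sources (informational here)
    allowed_actions: FrozenSet[str]
    max_amount: float               # action limit, e.g. transaction value
    active: bool = True             # set to False to stop the agent


@dataclass
class AgentRegistry:
    records: Dict[str, AgentRecord] = field(default_factory=dict)

    def register(self, record: AgentRecord) -> None:
        self.records[record.agent_id] = record

    def pause(self, agent_id: str) -> None:
        """Intervention: block all further actions by this agent."""
        self.records[agent_id].active = False

    def authorize(self, agent_id: str, action: str, amount: float) -> Decision:
        """Check a proposed action against the agent's registered scope and limits."""
        record = self.records.get(agent_id)
        if record is None or not record.active or action not in record.allowed_actions:
            return Decision.BLOCK
        if amount > record.max_amount:
            return Decision.ESCALATE
        return Decision.EXECUTE
```

Returning `ESCALATE` rather than silently capping the amount keeps a human reviewer in the loop at exactly the point where the agent reaches its limit, and `pause` gives operations staff a stop that takes effect on the agent's next proposed action.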

4 Experience Agents vs. Domain Agents

Beyond the L1/L2/L3 autonomy levels, agents also differ by who or what they interact with.

Experience Agents interact directly with people: customers, relationship managers, and operations staff. They handle conversations, provide recommendations, and guide users through tasks. These agents remember context from earlier in a session and personalize responses based on user history and intent. Think of chatbots, advisor copilots, and customer service assistants.

Domain Agents interact directly with systems: enterprise data, applications, and workflows. Operating within defined business rules, they run backend processes such as validation, risk assessment, and transaction orchestration across credit, fraud, compliance, and operations. They work behind the scenes, often invisible to end users.

Experience agents must handle ambiguity and communicate clearly. Domain agents must execute with precision and maintain audit trails. In practice, most banking workflows combine both types—Experience agents at the front end capturing user needs, Domain agents in the back end executing the work.

5 The AI-First Bank Architecture

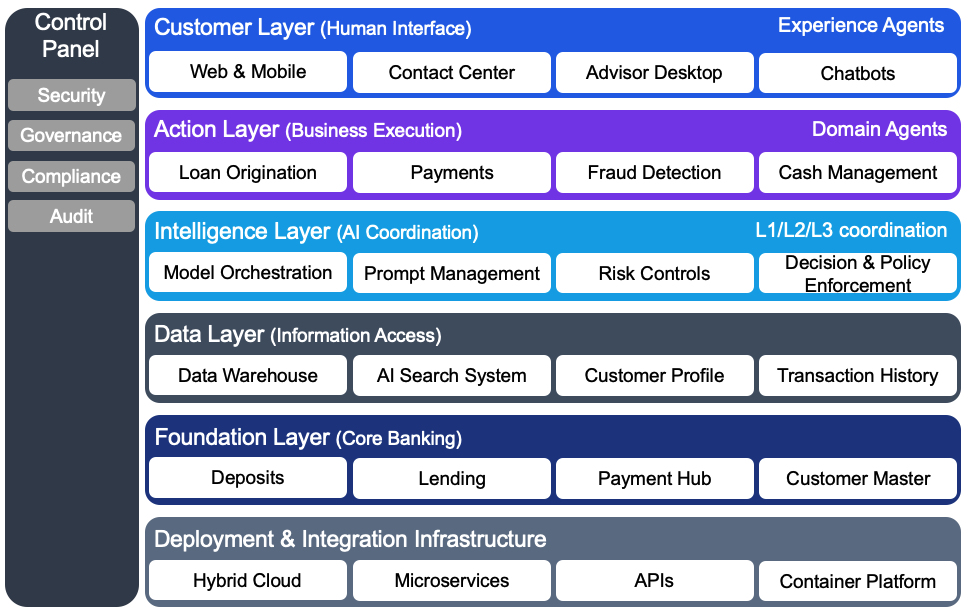

Now that we’ve defined agent types, where do they operate? An AI-first bank architecture is organized into six layers, each serving a distinct purpose. Experience Agents live in the customer-facing layer, Domain Agents execute work in the action layer, and the intelligence layer coordinates both.

1: Customer Layer: Web, mobile, contact centers, and advisor desktops make up the Customer Layer. Experience Agents operate here—the chatbots, copilots, and assistants that customers and bankers interact with directly. This layer manages user identity, personalization, and real-time interactions.

2: Action Layer: The Action Layer executes core banking work: loan origination, payments, fraud checks, cash management, and servicing. Domain Agents orchestrate these processes, connecting multiple systems and enforcing business rules. What used to be rigid, sequential workflows become policy-governed sequences that adapt based on data and context.

3: Intelligence Layer: The Intelligence Layer coordinates all AI activity across the bank. It manages model deployment, monitors performance, controls access to data, and enforces risk boundaries. This is where L1, L2, and L3 agents are orchestrated—ensuring that agents have access only to the data and systems appropriate to their role. The layer also handles prompt management, output validation, and model risk controls that regulators expect. Think of this as a coordination layer that connects modern agents with existing core systems—allowing banks to adopt agentic AI without replacing core platforms.

4: Data Layer: Agents rely on enterprise data such as customer profiles, transaction history, risk scores, and market data. The Data Layer provides controlled access to this information through existing data platforms and AI-ready retrieval systems. Rather than training models on sensitive bank data, agents receive only the information needed at execution time—under strict access, logging, and audit controls. This preserves data ownership while enabling agents to work with proprietary information safely.

5: Foundational Layer: Core banking systems—deposits, lending, payments, general ledger, and customer master data—anchor the Foundational Layer. These remain the system of record for balances, transactions, and financial positions. Agents read from and write to these systems but never replace them.

6: Control Plane and Deployment Infrastructure: Two elements cut across all layers. The Control Plane provides enterprise-wide security, governance, compliance, and auditability. The underlying deployment infrastructure ensures agents and supporting services can run consistently across on-premises and cloud environments, without changing how they are governed or controlled. Together, these capabilities allow innovation while preserving regulatory discipline and data protection.

This layered approach lets banks innovate in customer-facing AI while maintaining strict control over core banking operations.
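The Data Layer principle of giving agents only the information they need at execution time, with every request logged, can be illustrated with a field-level entitlement check. This sketch assumes a hypothetical `ACCESS_POLICY` mapping agent IDs to permitted customer fields and a plain list standing in for the audit store; real deployments would enforce entitlements in the data platform's own access controls rather than in application code.

```python
from datetime import datetime, timezone
from typing import Any, Dict, List, Set

# Hypothetical field-level entitlements: which customer attributes each agent may see.
ACCESS_POLICY: Dict[str, Set[str]] = {
    "servicing-chat": {"name", "product_list", "open_cases"},
    "credit-underwriting": {"name", "income", "risk_score", "transaction_summary"},
}


def fetch_context(
    agent_id: str,
    requested: List[str],
    customer_record: Dict[str, Any],
    audit_log: List[Dict[str, Any]],
) -> Dict[str, Any]:
    """Return only the requested fields this agent is entitled to, logging every request."""
    allowed = ACCESS_POLICY.get(agent_id, set())  # unknown agents get nothing
    granted = [f for f in requested if f in allowed and f in customer_record]
    denied = [f for f in requested if f not in allowed]
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,
        "granted": granted,
        "denied": denied,
    })
    return {f: customer_record[f] for f in granted}
```

Because the agent receives a filtered copy per request rather than standing access to the record, the audit log shows exactly which fields each agent saw and when, which is the evidence a reviewer would ask for.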

6 Use Cases: Agents in Practice

The architecture comes to life through specific banking workflows. These five use cases demonstrate how Experience and Domain agents collaborate across retail lending, risk, compliance, commercial banking, and capital markets.

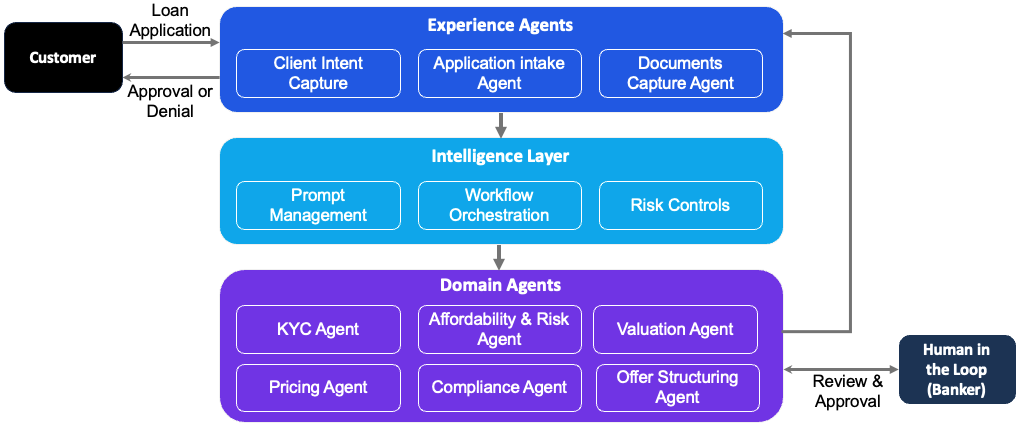

6.1 Mortgage Loan Underwriting (Agent Collaboration)

Experience agents, domain agents, and the intelligence layer collaborate to automate mortgage underwriting workflows—compressing cycle times from weeks to days while credit officers retain final approval.

Scenario: A borrower applies for a mortgage loan. Experience agents manage application intake and document collection, while domain agents perform underwriting analysis across income, affordability, collateral, pricing, and compliance. Credit officers remain accountable for final approval.

Customer experience: Experience agents guide the applicant through loan intake, document submission, and status updates across digital and assisted channels. The process reduces back-and-forth, improves transparency, and shortens application timelines.

Banker experience: Domain agents perform income and identity verification, affordability and risk analysis, property valuation, pricing, and compliance checks. Draft loan terms are generated automatically and routed to underwriters and credit officers for review and approval within defined policy limits, with exceptions escalated as needed.

Outcome: Mortgage underwriting becomes faster and more consistent, while credit officers retain full control over final decisions within policy and regulatory limits.

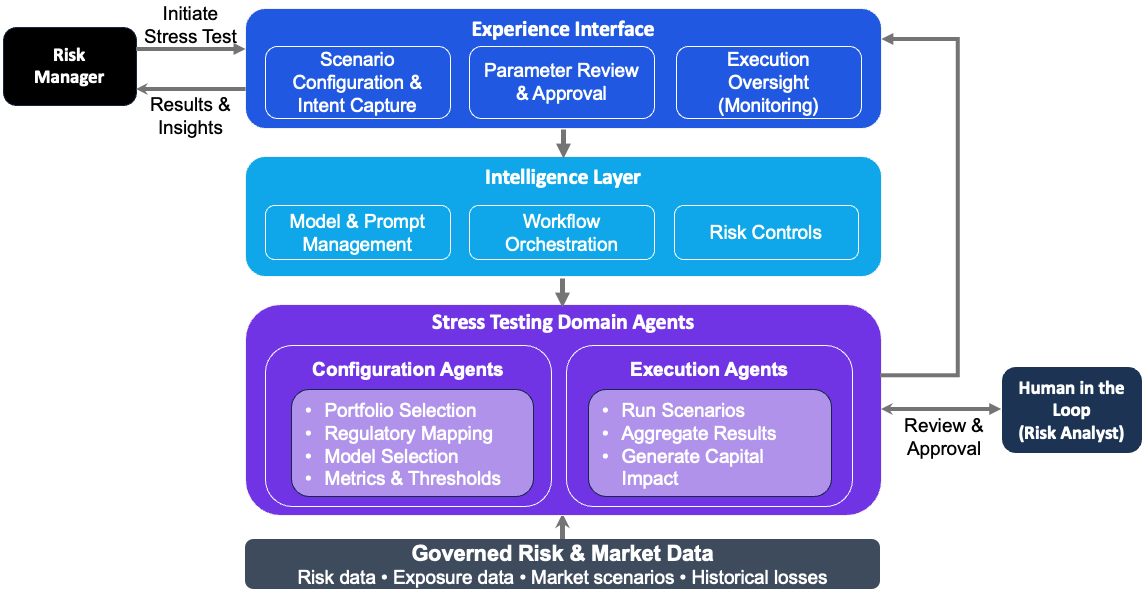

6.2 Risk Management (Stress Testing and CCAR)

A multi-agent architecture transforms CCAR preparation by automating manual handoffs while preserving regulatory oversight.

Scenario mapping, data assembly, and stress calculations can execute in hours rather than months—while risk officers retain formal approval authority before regulatory submission.

Scenario: Regulatory stress testing and scenario analysis for CCAR compliance.

The problem: Preparing for regulatory stress tests traditionally takes months of data gathering, model execution, and scenario construction to answer questions like “What happens if interest rates rise by 2 percentage points?”

The solution: A Scenario Configuration Agent applies Federal Reserve stress test requirements and configures the required baseline and severely adverse scenarios. Execution Agents then apply those scenarios to the bank’s portfolio using the existing risk calculation platform.

Outcome: The Chief Risk Officer can review and approve capital, liquidity, and profitability projections within a single business day, once scenarios are executed and results are prepared.

6.3 Financial Crime (AI Investigator)

Scenario: Anti-money laundering alerts with high false-positive rates that traditionally require significant manual investigation effort.

The process: The AI Investigator Agent reviews transaction alerts as they are generated, assigns a quantitative risk score, and produces a clear narrative explaining suspicious behavior such as rapid fund movement or transaction structuring.

Human oversight: Investigators review the AI-generated narrative and visual fund-flow diagrams instead of spending hours gathering evidence manually. They validate findings, determine case disposition, and provide feedback that improves future investigations.

Outcome: Work that previously required days of manual evidence gathering is significantly accelerated, producing regulator-ready, auditable cases with consistent documentation.

6.4 Commercial Banking Operations (Email Triage)

Scenario: A bank’s Commercial Banking division fields 60,000–70,000 client emails monthly. Requests range from urgent credit line draws to complex legal inquiries—each requiring specialized expertise.

The challenge: A team manually reviewed each incoming email by reading the request, assessing urgency, identifying the right internal expert, routing it correctly, and logging it for tracking. For complex requests, this process could take hours, delaying responses and increasing the risk of missed opportunities.

The solution: Scotiabank deployed an agentic AI system to automate email intake and routing. The agent interprets client intent across dozens of request types, routes messages to appropriate specialists, and creates tracking cases. Edge cases and exceptions are flagged for human review.5

Outcome: The system now automates the majority of email triage and routing. Response times dropped from hours to minutes, allowing the bank to redeploy a significant portion of the routing team to higher-value, client-facing work. Client satisfaction improved, and coverage expanded beyond business hours.5

Banks historically considered unstructured client communications “too variable to automate.” Agentic AI proved otherwise. The agent handles irregularity that would break traditional automation—interpreting ambiguous requests, understanding context, and making routing decisions that previously required human judgment.
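Scotiabank's implementation details are not described here, so the following is only a hypothetical sketch of the routing step in a system of this kind. It assumes an upstream model has already classified each email into an intent label with a confidence score; the `ROUTES` table, queue names, and the 0.85 confidence floor are illustrative assumptions. Every email receives a tracking case, and unknown intents or low-confidence classifications are flagged for human review.

```python
from dataclasses import dataclass
from itertools import count
from typing import Dict, List, Optional

# Hypothetical mapping from a classified intent to the specialist queue that owns it.
ROUTES: Dict[str, str] = {
    "credit_line_draw": "credit-operations",
    "legal_inquiry": "legal",
    "payment_investigation": "payments-ops",
}
CONFIDENCE_FLOOR = 0.85  # below this, a person confirms the routing
_next_case_id = count(1)


@dataclass
class Case:
    case_id: int
    email_id: str
    queue: str
    needs_human_review: bool


def triage(email_id: str, intent: str, confidence: float, cases: List[Case]) -> Case:
    """Route one email using an upstream classifier's intent label and confidence."""
    queue: Optional[str] = ROUTES.get(intent)
    # Unknown intents and low-confidence predictions are flagged rather than guessed.
    needs_review = queue is None or confidence < CONFIDENCE_FLOOR
    case = Case(next(_next_case_id), email_id, queue or "triage-review", needs_review)
    cases.append(case)  # every email gets a tracking case, routed or flagged
    return case
```

In this design, a low-confidence email keeps its suggested queue so the reviewer starts from the model's best guess, while an unrecognized intent goes to a general review queue.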

6.5 Capital Markets (Analyst Research Automation)

Scenario: Investment analysts at RBC Capital Markets cover hundreds of public companies. When companies release SEC filings or earnings reports, analysts must review the information and update investment recommendations—often within hours of disclosure.

The solution: RBC deployed Aiden, a multi-agent research platform. When a covered company files with the SEC, specialized agents activate: one extracts financial data and risk factors, another compares results to forecasts, and a third monitors real-time media coverage. An orchestration agent synthesizes these inputs into preliminary research notes for analyst review.

Outcome: Analysis that previously required 2-3 hours of manual document review can be completed in minutes. Analysts receive structured summaries highlighting what changed and why it matters, allowing them to focus on generating investment insights rather than gathering data.4
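The fan-out and synthesis pattern described here can be sketched with Python's `asyncio`: specialist agents run concurrently when a filing arrives, and an orchestration step merges their outputs into a draft note for analyst review. This is a generic illustration, not RBC's implementation; the three agent functions are hypothetical placeholders that would call models, filing data, and news feeds in practice.

```python
import asyncio
from typing import Dict


# Hypothetical specialist agents; real ones would call models and data services.
async def extract_financials(filing: Dict[str, float]) -> Dict[str, float]:
    return {"revenue": filing["revenue"], "eps": filing["eps"]}


async def compare_to_forecast(
    filing: Dict[str, float], forecast: Dict[str, float]
) -> Dict[str, float]:
    return {metric: filing[metric] - forecast[metric] for metric in forecast}


async def scan_media(ticker: str) -> Dict[str, int]:
    return {"articles_last_hour": 0}  # placeholder for a news-feed integration


async def on_filing(
    ticker: str, filing: Dict[str, float], forecast: Dict[str, float]
) -> str:
    """Fan out to the specialist agents concurrently, then synthesize a draft note."""
    financials, variance, media = await asyncio.gather(
        extract_financials(filing),
        compare_to_forecast(filing, forecast),
        scan_media(ticker),
    )
    lines = [f"DRAFT for analyst review: {ticker}"]
    for metric, delta in variance.items():
        direction = "above" if delta >= 0 else "below"
        lines.append(
            f"- {metric}: {financials[metric]} ({direction} forecast by {abs(delta):.2f})"
        )
    lines.append(f"- media items in last hour: {media['articles_last_hour']}")
    return "\n".join(lines)
```

Running the specialists concurrently means the draft is ready as soon as the slowest agent finishes, and labeling the output as a draft keeps the analyst as the author of the final recommendation.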

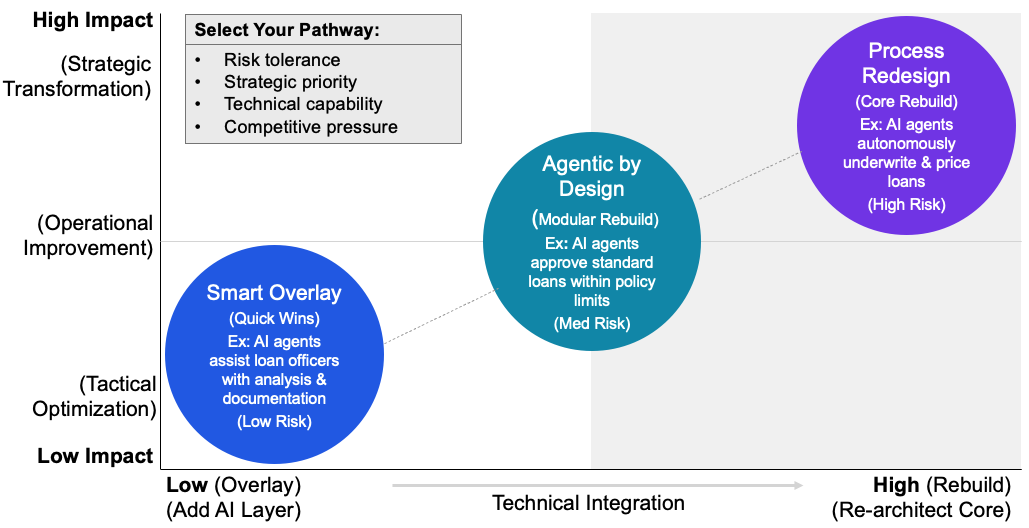

7 Three Pathways to Agentic Banking

Most regional banks still run core systems on decades-old mainframes. The good news is that full modernization is not required to begin deploying agents. Insight-focused agents layer onto legacy systems through APIs. However, agents that execute processes or make autonomous decisions require modern data platforms and real-time connectivity.

Moving from vision to execution requires a practical roadmap. Banks can adopt agentic AI through three distinct approaches, each aligned to different risk appetites, technical readiness, and strategic objectives.

Pathway 1: Smart Overlay

The fastest way to see results: wrap AI agents around existing processes without requiring a complete technology overhaul. Rather than replacing legacy infrastructure, banks add an intelligent layer on top of current workflows.

Consider treasury operations. Many banks already use robotic process automation (RPA) for routine cash sweeps and balance reconciliation. A smart overlay builds on this foundation by introducing AI agents that analyze market conditions and generate real-time recommendations for liquidity optimization, pricing, and hedging—within defined risk limits and approval controls.

This works best when banks have clearly documented standard operating procedures. The AI agent follows these documented steps, ensuring consistency and compliance while adding intelligence. The benefit is speed: banks unlock near-term productivity gains without large-scale system replacements. Even so, Smart Overlay delivers maximum value when applied to a complete business domain (e.g., the entire Consumer Lending workflow) rather than scattered across isolated use cases.

Pathway 2: Agentic by Design

Some capabilities can’t be delivered by overlaying intelligence on existing tools; they need to be built from the ground up. The agentic by design approach creates new, purpose-built autonomous applications tailored to specific banking functions. These agents operate alongside existing workflows, augmenting them without replacing the end-to-end process.

Think of it like microservices. Instead of one monolithic system, banks develop smaller, specialized agentic services that handle specific functions yet integrate smoothly into the broader infrastructure. For example, rather than retrofitting a 30-year-old loan origination system, a bank might build a new autonomous credit decisioning service that coordinates multiple agents: one for document verification, another for creditworthiness analysis, and a third for regulatory compliance checks.

This approach requires more upfront investment but delivers greater long-term flexibility. As regulations change or customer expectations evolve, individual agent services can be updated or replaced without disrupting the entire technology stack.

Pathway 3: Process Redesign

The boldest approach—and the hardest—involves fundamentally reengineering workflows to embed agents at their core. This goes beyond automation to reimagine how work gets done.

Traditional mortgage servicing assumes a human reviews each refinancing opportunity. Process redesign changes the operating model: autonomous agents continuously monitor market conditions, borrower profiles, and property valuations, proactively triggering refinance workflows when optimal conditions emerge. The process isn’t just faster—it’s fundamentally different.

This pathway works best for strategically important processes where current automation is low but business impact is high. It demands close collaboration between business leaders, process engineers, and AI developers to map new workflows that use agent capabilities rather than simply replicating existing manual steps.

Choosing Your Path

Most banks won’t pursue all three approaches simultaneously. Smart overlays deliver quick wins in well-documented, repeatable processes. Agentic by design creates competitive differentiation in customer-facing value chains. Process redesign earns its returns where business value is demonstrably high.

The key is matching the approach to the specific context: organizational readiness, regulatory constraints, technical debt, and strategic importance of the process being transformed.

Build vs. Buy in an Agentic World

Banks pursuing these pathways face a fundamental question: what should we build ourselves, and where should we partner? The answer shapes timeline, cost, and competitive positioning.

A mature vendor ecosystem has emerged across three distinct layers. At the foundation, platforms such as Amazon Bedrock, Microsoft Azure AI, and Google Vertex AI provide model hosting, orchestration, and compute—capabilities that are increasingly commoditized and rarely a source of differentiation.

Above this layer, cross-vendor orchestration platforms such as ServiceNow coordinate agents across systems, while products like Salesforce Agentforce deliver pre-built agents for common enterprise functions such as CRM and compliance.

Finally, banking-specific platforms from providers like Temenos and Finastra embed domain expertise directly into core workflows, accelerating adoption in regulated environments.

The pattern among leading banks is clear: partner for infrastructure speed, build for competitive differentiation. Infrastructure and orchestration come from vendors. Proprietary risk models, customer intelligence engines, and strategic decisioning logic get built in-house. Central governance—including policy controls, compliance validation, and performance monitoring—always stays internal.

This hybrid approach compresses what used to take years into months. It lets banks focus their best technical talent on the work that differentiates them in the market: understanding customer behavior, managing complex risks, and making better credit decisions. The underlying technology matters less than how deliberately it is governed and applied.

8 Business Impact: The Economics of Agent-Driven Banking

The shift to agent-driven architectures delivers measurable returns. McKinsey research shows banks implementing agentic AI across frontline operations achieve:1

- 3-15% revenue increase per relationship manager through better lead conversion and coverage

- 20-40% reduction in cost to serve by automating administrative workflows

- 30% growth in pipeline from AI-driven prospecting and lead qualification

- 10-12 hours per week returned to each banker for client-facing activities

These gains stem from rebalancing how bankers spend time. At many commercial banks, relationship managers spend just 25-30% of their time in client dialogue. Agents handling prospecting, meeting preparation, and documentation push that figure toward 70%, changing the banker’s role from administrator to advisor.

The impact compounds across use cases: agents improve conversion, increase deal quality, and shorten approval cycles simultaneously—driving both revenue growth and margin expansion.

However, early evidence shows banks often chase low-value use cases first. Meeting summarization and basic chatbots are easier to implement but deliver modest returns. The highest-value applications—prospecting agents, lead nurturing, and deal optimization—require deeper integration with core systems and workflow redesign, but they deliver the returns that matter: revenue growth, not just efficiency.

Adoption is accelerating as banks move from pilots to revenue-backed deployments. Customer service applications of generative AI in financial services more than doubled over the past year, rising from 25% to 60% of institutions. Over 90% of banks deploying AI report positive revenue impact, with customer experience metrics improving by 26% on average.4

These improvements compound. Better customer experience drives retention, retention increases lifetime value, and lifetime value justifies further AI investment. Banks that deployed AI early are now scaling L2 and L3 agents across departments. Late adopters face a widening competitive gap.

The largest returns come when agents are applied across an entire business domain—such as Commercial Real Estate Lending—rather than isolated tasks. This ensures gains in one step flow through the full value chain.

9 Scaling Agentic AI: Six Critical Principles

Moving from pilots to enterprise scale requires deliberate choices about how agents integrate into banking operations.

1. Be explicit about where agents create value. Leading banks are disciplined about which use cases they lead on and which they follow. They set clear expectations for productivity gains, customer experience improvements, and competitive advantage before scaling.

2. Scale by business domain, not isolated tasks. Successful scaling requires shifting from isolated processes to end-to-end business domains. Rather than spreading investment across disconnected pilots, leading banks focus on a single core subdomain—such as Consumer Lending or Payments and Cash Management—and apply agentic capabilities across the full workflow using one of the pathways described in Section 7.

This domain-first approach allows capabilities to be reused across adjacent workflows. An agent developed for credit analysis during underwriting, for example, can also support portfolio monitoring, while data prepared for sales can inform retention and pricing decisions.

3. Build reusable technology foundations. Successful implementations rest on three foundations: shared orchestration to coordinate work across systems, consistent decision logic across workflows, and strong security and trust controls.

4. Establish governance guardrails. Before scaling, banks must clearly define where agents can act independently, where human approval is required, and how decisions are monitored and audited. Limits on actions—such as credit adjustments or approvals—should be enforced through workflow design, with continuous monitoring and clear escalation paths.

5. Prepare the organization for agent-driven work. As agents take on preparation and coordination, bankers and analysts shift toward reviewing outcomes, handling exceptions, and exercising judgment. Scaling succeeds when roles evolve alongside automation, not as a separate change effort.

6. Organize for federated development. At scale, neither fully centralized nor fully decentralized models work. Centralized teams become bottlenecks, while fully decentralized development creates fragmentation. Shared standards are required for governance and control, while execution remains close to the business.

BlackRock’s Aladdin Copilot illustrates how a federated model works in practice. A central AI platform team defines shared communication standards, integration patterns, and governance controls. Individual business units then develop specialized agents—such as portfolio analysis, risk management, or compliance—on top of this common foundation.4 This federated model becomes essential at scale. When you have five agents, coordination is manageable. When you have fifty, you need standardized protocols.

10 AI Governance: The Banking Imperative

In banking, AI governance is not optional—it is a requirement for deployment. The goal is to ensure models and agents are documented, monitored, and auditable in production. This section summarizes the core regulatory expectations and governance structures banks use to meet those requirements. It complements Section 3.1, which focuses on agent-specific risks and control mechanisms at the system level.

10.1 Three Regulatory Pillars

SR 11-7 (Model Risk Management) - The Federal Reserve’s model risk management guidance applies to AI and machine learning models as well as traditional ones. Banks must show that models are fit for purpose through independent validation, clear documentation, and board-level oversight. For adaptive models, this requires extending traditional validation with ongoing monitoring and governance rather than relying on one-time approval.

ECOA and Regulation B (Fair Lending) - When a bank denies credit or takes other adverse action, fair lending rules require it to give the applicant the specific principal reasons in understandable terms. There is no exemption for AI-based decisions: if an agent denies a loan, the bank must still be able to state concrete reasons for the outcome. Vague explanations such as “behavioral spending patterns” are insufficient, while specific factors, such as recent overdrafts combined with late bill payments, meet regulatory standards.

Third-Party Risk Management (2023 interagency guidance, superseding SR 13-19) - Banks frequently rely on third-party vendors for AI capabilities. Regulatory guidance is clear: outsourcing technology does not outsource responsibility. Banks remain accountable for model outcomes, even when vendors limit access to underlying algorithms. This requires strong due diligence and clear controls over how vendor models operate in production.

10.2 The Explainability Requirement

Traditional credit models, such as scorecards and logistic regression, are easier to explain because each input's contribution to the score can be traced directly. Modern AI models, especially deep learning, often deliver more accurate predictions, but their internal logic is harder to interpret. To meet regulatory requirements, banks rely on post-hoc explainability methods, such as SHAP or LIME, that estimate which factors influenced a decision. These methods make it possible to generate the reasons regulators require when credit is denied, but the explanations are approximations and can be difficult to defend.

As a result, banks typically limit complex AI models to L1 insight applications, where explainability requirements are lighter, and reserve simpler, more transparent models for L2 and L3 use cases involving execution and credit decisions, where regulatory scrutiny is highest.
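For an additive model such as logistic regression, reason codes can be computed exactly. Each feature's contribution to the log-odds, measured against a baseline applicant, shows how far that feature pushed the decision toward denial. SHAP extends the same idea to more complex models, but only approximately. The sketch below assumes a hypothetical four-feature model; the weights, baseline values, and reason wording are invented for illustration.

```python
import math

# Hypothetical logistic credit model: positive weights raise approval log-odds.
WEIGHTS = {
    "months_since_last_overdraft": 0.04,
    "late_payments_12m": -0.60,
    "utilization_pct": -0.03,
    "years_at_address": 0.10,
}
INTERCEPT = 0.5
# Hypothetical baseline applicant used as the reference point.
BASELINE = {
    "months_since_last_overdraft": 24.0,
    "late_payments_12m": 0.5,
    "utilization_pct": 35.0,
    "years_at_address": 5.0,
}
# Hypothetical mapping from model features to plain-language reasons.
REASON_TEXT = {
    "months_since_last_overdraft": "Recent overdraft on deposit account",
    "late_payments_12m": "Late payments in the last 12 months",
    "utilization_pct": "High utilization of existing credit",
    "years_at_address": "Length of time at current address",
}


def approval_probability(applicant: dict) -> float:
    """Logistic model: sigmoid of intercept plus weighted features."""
    z = INTERCEPT + sum(w * applicant[f] for f, w in WEIGHTS.items())
    return 1 / (1 + math.exp(-z))


def principal_reasons(applicant: dict, top_n: int = 2) -> list:
    """Rank features by how far they pushed log-odds below the baseline applicant."""
    contributions = {f: w * (applicant[f] - BASELINE[f]) for f, w in WEIGHTS.items()}
    # Keep only features that hurt the applicant; most negative comes first.
    negative = sorted((c, f) for f, c in contributions.items() if c < 0)
    return [REASON_TEXT[f] for _, f in negative[:top_n]]
```

For an applicant with a recent overdraft and three late payments, the two largest negative contributions come from late payments and the overdraft. Those reasons are derived from this applicant's actual contributions rather than from a generic checklist, which is the kind of specificity described in 10.1.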

10.3 Continuous Monitoring Requirements

Regulators expect banks to demonstrate ongoing oversight of AI systems in production. This includes monitoring performance, stability, and fairness, documenting exceptions, and ensuring human review for high-impact outcomes. Monitoring is not a one-time check at deployment but a continuous supervisory obligation.
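Stability monitoring often relies on the population stability index (PSI). PSI compares the distribution of a score or input in recent production data against a reference sample, such as the validation data, using PSI = Σ (a_i − e_i) · ln(a_i / e_i) over bins. The sketch below is a minimal version with caller-supplied bin edges. The 0.10 and 0.25 cut-offs are a widely used industry rule of thumb, not a regulatory threshold.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges):
    """Share of values in each bin defined by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    return [c / total for c in counts]


def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI between a reference sample and recent production data."""
    psi = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


def stability_flag(psi):
    # Common industry rule of thumb, not a regulatory threshold.
    if psi < 0.10:
        return "stable"
    if psi < 0.25:
        return "monitor"
    return "investigate"
```

An "investigate" flag would typically trigger the exception documentation and human review described above rather than an automatic model change.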

Fair lending testing extends this monitoring. Banks must assess whether models produce disparate impact across protected classes even when protected attributes are not used as inputs, because other variables can act as proxies for them. If approval rates differ significantly across groups, banks must adjust the model or demonstrate a legitimate business justification and show that no less discriminatory alternative would meet the same need.
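A common first screen for disparate impact is the adverse impact ratio: each group's approval rate divided by the approval rate of a reference group. The sketch below flags groups whose ratio falls below 0.8. That threshold is borrowed from the "four-fifths" rule in U.S. employment guidance. It is a screening heuristic, not a legal standard under ECOA, and the group labels are hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group_label, approved: bool) pairs."""
    totals, approvals = defaultdict(int), defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}


def adverse_impact_ratios(decisions, reference_group):
    """Each group's approval rate divided by the reference group's rate."""
    rates = approval_rates(decisions)
    ref = rates[reference_group]
    return {g: r / ref for g, r in rates.items()}


def flag_groups(ratios, threshold=0.8):
    # 0.8 mirrors the "four-fifths" screening heuristic; not a legal safe harbor.
    return sorted(g for g, r in ratios.items() if r < threshold)
```

A flagged group does not settle the question. It starts the deeper statistical analysis and business-justification review that fair lending rules call for.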

10.4 Governance Structure

Model Risk Committees - These committees provide board-level oversight of AI deployment, performance, and risk exposure. They review models before deployment, oversee performance in production, and ensure compliance with regulatory guidance. Senior management reports on individual model risks and aggregate exposure.

Independent Validation Teams - These teams provide critical analysis independent of model development. For AI, this includes bias testing across customer segments, validating explainability outputs, and confirming models perform as designed under varying conditions. Validation rigor scales with model risk, with high-stakes credit decisions requiring more comprehensive review than lower-risk use cases.

Vendor Management Processes - These processes govern the use of third-party AI tools. Banks require vendors to provide methodology documentation. Banks also conduct their own independent outcome analysis, comparing vendor model predictions with actual results, and put controls in place such as human review of edge cases or secondary validation models.

AI Ethics Review Boards - These boards conduct independent review of AI models before deployment. Models are assessed for data usage, transparency, and bias. Approval is tiered by risk: low-risk models clear at the department level, while high-risk models require C-suite sign-off. This ensures ethical considerations are embedded in the approval process rather than treated as an afterthought.
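The outcome analysis expected of vendor models can start with a simple calibration backtest. Group loans by the vendor's predicted default probability, then compare the average prediction in each band with the default rate actually observed. The band edges and the 5-percentage-point tolerance below are hypothetical choices for illustration; in practice they would be set by the bank's validation standards.

```python
from bisect import bisect_right


def calibration_by_band(predictions, outcomes, band_edges):
    """Compare mean predicted probability with the observed event rate per band.

    predictions: vendor-model probabilities in [0, 1]
    outcomes: 1 if the event (e.g. default) occurred, else 0
    band_edges: sorted interior cut points (hypothetical), e.g. [0.05, 0.10, 0.20]
    """
    totals = {}  # band index -> (count, sum of predictions, sum of outcomes)
    for p, y in zip(predictions, outcomes):
        band = bisect_right(band_edges, p)
        n, sum_p, sum_y = totals.get(band, (0, 0.0, 0))
        totals[band] = (n + 1, sum_p + p, sum_y + y)

    report = []
    for band in sorted(totals):
        n, sum_p, sum_y = totals[band]
        predicted, observed = sum_p / n, sum_y / n
        report.append({"band": band, "count": n, "predicted": predicted,
                       "observed": observed, "gap": observed - predicted})
    return report


def bands_outside_tolerance(report, tolerance=0.05):
    """Bands where observed and predicted rates differ by more than the tolerance."""
    return [row["band"] for row in report if abs(row["gap"]) > tolerance]
```

Because this check uses only the vendor's outputs and the bank's own outcome data, it works even when the vendor does not disclose the underlying algorithm.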

10.5 Governance as Enabler

Governance is what allows banks to deploy AI safely at scale. Clear documentation, continuous monitoring, independent validation, and defined approval authority keep deployments compliant and under control. In regulated environments, strong governance is not a barrier to progress; it is a prerequisite for scaling.

11 The AI-First Bank: Where Banking Is Headed

Banks that scale agents effectively will not just improve efficiency. Over time, they will change how financial services are delivered, how decisions are made, and where value is created.

Some industry observers describe this shift as a move toward a “do-it-for-me” (DIFM) model. Rather than using tools to research options, customers rely on AI agents to monitor conditions, identify opportunities, and initiate actions within predefined controls. For example, instead of actively searching for mortgage rates, an agent can track market movements, flag refinancing opportunities, and prepare next steps for customer approval. In this model, the bank’s role evolves from providing decision tools to operating trusted, policy-constrained agents on customers’ behalf.6

Several characteristics are likely to distinguish banks that move furthest in this direction.

1. Always-On Financial Support

Customer interaction becomes more continuous and event-driven rather than episodic. Agents monitor accounts, obligations, and market conditions, and notify customers when action may be warranted. Banking becomes more proactive, but still bounded by customer consent and policy constraints.

2. More Adaptive Financial Products

Product boundaries become less rigid. Instead of managing separate accounts and credit products, customers may interact with flexible financial capacity that adjusts based on usage, risk profile, and preferences. Financing, payments, and savings behaviors adapt dynamically rather than being tied to fixed product structures.

3. Banking Embedded in Other Experiences

Financial services increasingly appear inside non-bank platforms—commerce, travel, and business software—rather than requiring dedicated banking interfaces. In this model, banks provide regulated infrastructure and decisioning capabilities while customer interaction occurs elsewhere.

4. Higher Automation in Operations

Agents take on a greater share of routine service, investigation, and compliance preparation tasks within defined guardrails. Human teams focus on complex cases, oversight, and judgment rather than coordination and manual processing. Staffing models shift, but accountability remains human.

5. More Responsive Balance Sheet Management

Decision cycles around liquidity, pricing, and exposure shorten. Rather than periodic reallocation, banks adjust within approved limits based on changing conditions. Customer-level terms, such as credit limits, evolve dynamically but remain governed by policy and regulatory requirements.

6. Leaner Operating Models

As automation increases, banks can support larger customer bases without proportional growth in operations staff. Scale comes from system design and governance, not unchecked autonomy.

These are not incremental improvements; they represent a different operating model for banking. Banks that pursue this transformation systematically are likely to build competitive advantages that institutions relying on traditional models will find difficult to match.

12 Conclusion

Banks are moving from AI that generates content to AI that executes work. This shift expands what automation can support in financial services—from reactive service interactions to proactive financial guidance, from manual compliance workflows to assisted regulatory reporting, and from periodic risk analysis to more continuous monitoring.

The architecture outlined here—layered, governed, and designed for agent collaboration—provides a practical framework for this transition. Experience agents improve customer and employee interactions. Domain agents automate structured, multi-step processes. The intelligence layer ensures these activities operate within defined policy, risk, and control boundaries.

Early adopters are beginning to see measurable benefits. Relationship managers report meaningful time savings, compliance teams reduce turnaround times, and stress testing cycles shorten significantly compared to traditional approaches. In frontline workflows, some banks that have redesigned processes around agentic capabilities report 30% pipeline growth and twice the conversion rates of traditional approaches.1

The advantage does not come from deploying agents in isolation, but from integrating them systematically as part of core operating infrastructure. The question for banks is no longer whether agentic AI is relevant, but how to scale it deliberately—while preserving the governance, accountability, and controls that the industry requires.

13 References

[1] McKinsey. (2025). Agentic AI is here. Is your bank’s frontline team ready?

[2] BCG. (2025). From Branches to Bots: Will AI Agents Transform Retail Banking?

[3] Deloitte / WSJ CFO Journal. (2025). Agentic AI in Banks: Supercharging Intelligent Automation.

[4] NVIDIA. (2025). AI On: How Financial Services Companies Use Agentic AI to Enhance Productivity, Efficiency and Security.

[5] Scotiabank. (2025). How Agentic AI is the ‘next big wave’ in artificial intelligence.

[6] Citi. (2025). Agentic AI - Finance & the ‘Do It For Me’ Economy.

[7] Bank of America. (2025). The new wave: Agentic AI - Agentic AI represents a generation of increasingly powerful foundation models that may spark a corporate efficiency revolution.

[8] Morgan Stanley. (2024). AI @ Morgan Stanley Debrief Launch.

[9] Morgan Stanley. (2024). AskResearchGPT helps advisors access 70,000+ research reports.

[10] Jiang et al. (2025). Adaptation of Agentic AI.

[11] MIT Sloan Management Review. (2025). How organizations can achieve big value with smaller AI efforts.

[12] EY. (2024). Document Intelligence Platform.